After the launch of ChatGPT, the world turned its attention to the rapid evolution of artificial intelligence. We were all impressed by the algorithm’s ability to generate such natural text, interpret requests, and carry out instructions.

In addition to integration with search engines, we should see the technology become much easier to use in many applications. The question that remains is: how far can AI go? What can (or can’t) be replaced by an algorithm?

What is artificial intelligence?

Artificial intelligence (AI) is a field of computer science that focuses on the development of algorithms and systems capable of performing tasks that normally require human intelligence, such as learning, reasoning, perception, speech recognition, vision, and natural language understanding. In short, AI allows computers to process information “intelligently” and make decisions based on data.

This “intelligence” comes from training on large amounts of data, which improves the system’s ability to make decisions. So, to understand where this might (or might not) stop, we need to understand how data is processed and used.

What is “GPT”? (Generative Pre-trained Transformer)

GPT is a pre-trained language model, developed by OpenAI, that uses the Transformer architecture (introduced by Google researchers in 2017) to generate text. GPT-3, released in 2020, is the third version of the model and, at the time of writing, the most powerful one. ChatGPT is fine-tuned from a model in the GPT-3.5 series, an improved version of GPT-3.

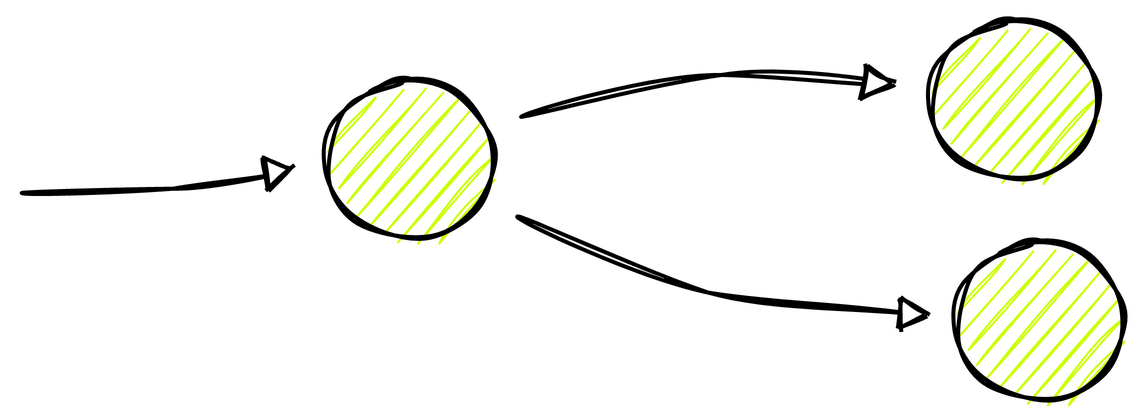

Training is done on a “neural network”, a structure made of simple units called neurons that are organized in layers. Each neuron receives data, performs a calculation weighted by its parameters, and forwards the result to the neurons in the next layer. During learning, these parameters are adjusted so that the network’s output improves.
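To make this concrete, here is a minimal sketch in plain Python of a neuron and a layer of neurons. The sigmoid activation and every weight value are illustrative assumptions; GPT’s real layers are Transformer blocks with different activations and billions of learned weights.

```python
import math


def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of its inputs plus a bias,
    squashed into (0, 1) by a sigmoid activation."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))


def layer(inputs, weight_rows, biases):
    """A layer is several neurons that all read the same inputs;
    its outputs become the inputs of the next layer."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Hypothetical parameters for a tiny network: 2 inputs -> 2 neurons -> 1 neuron.
hidden_weights = [[0.5, -0.3], [0.8, 0.1]]
hidden_biases = [0.0, -0.2]
output_weights = [[1.2, -0.7]]
output_biases = [0.1]

x = [1.0, 2.0]
hidden = layer(x, hidden_weights, hidden_biases)
output = layer(hidden, output_weights, output_biases)
print(hidden, output)
```

Changing any weight or bias changes the final output, which is exactly what training exploits.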

Each GPT version has its own number of parameters and connections between them. Parameters are like the ingredients in a recipe: they influence the final result, such as its color, texture, taste, and temperature. Connections, on the other hand, are how the parameters interact, and adjusting these combinations makes the final result more (or less) accurate.
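Adjusting parameters until the result becomes more accurate is what training does. As a hedged illustration (not how GPT is trained at scale), this sketch fits the two parameters of a single linear neuron with gradient descent; the training data, learning rate, and number of epochs are made-up assumptions.

```python
def train_linear_neuron(data, lr=0.05, epochs=1000):
    """Fit y ~ w * x + b by gradient descent on the mean squared error.

    w and b are the neuron's parameters; each epoch nudges them in the
    direction that reduces the average error over the training data."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def mse(data, w, b):
    """Average squared error of the neuron's predictions."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


# Hypothetical training data generated from y = 2x + 1.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train_linear_neuron(data)
print(round(w, 2), round(b, 2), mse(data, w, b))
```

The error starts large (both parameters are zero) and shrinks as the parameters move toward the values that explain the data.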

See how the number of parameters grew across GPT versions:

- GPT-1: 117 million parameters

- GPT-2: 1.5 billion parameters

- GPT-3: 175 billion parameters
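Most of these parameters sit in the Transformer blocks, so a common back-of-the-envelope estimate is about 12 × d_model² weights per block plus the token embeddings. The sketch below applies that approximation (an assumption that ignores biases, layer norms, and position embeddings) to the layer counts, widths, and vocabulary sizes reported for the largest model of each version. For GPT-1 and GPT-3 it lands close to the figures listed; for GPT-2 it lands near 1.5 billion, the size usually reported for its largest version.

```python
def approx_gpt_params(n_layers, d_model, vocab_size):
    """Rough parameter count for a GPT-style Transformer decoder.

    Each block has about 12 * d_model**2 weights: 4 * d_model**2 in the
    attention projections (query, key, value, output) and 8 * d_model**2
    in the feed-forward network, whose hidden layer is 4 * d_model wide.
    Token embeddings add vocab_size * d_model. Biases, layer norms and
    position embeddings are ignored, so this slightly underestimates.
    """
    per_block = 12 * d_model ** 2
    return n_layers * per_block + vocab_size * d_model


# (layers, width, vocabulary size) of the largest model of each version.
configs = {
    "GPT-1": (12, 768, 40478),
    "GPT-2": (48, 1600, 50257),
    "GPT-3": (96, 12288, 50257),
}

estimates = {name: approx_gpt_params(*cfg) for name, cfg in configs.items()}
for name, n in estimates.items():
    print(f"{name}: ~{n / 1e9:.2f} billion parameters")
```

Because the block term grows with the square of the width, making a model wider is what drives the jump from millions to hundreds of billions of parameters.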

As complexity increases, much more processing power is required, which makes it even harder to train the model incrementally with new data. For this reason, ChatGPT’s training data only goes up to 2021.
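To get a feel for how much processing power is involved, a widely used rule of thumb estimates training compute as about 6 floating-point operations per parameter per training token. The sketch applies it to GPT-3 (175 billion parameters, roughly 300 billion training tokens). The single accelerator sustaining one petaFLOP per second is a purely hypothetical assumption, chosen only to turn the total into a duration.

```python
def training_flops(n_params, n_tokens):
    """Rule-of-thumb training cost: ~6 FLOPs per parameter per token
    (about 2 for the forward pass and 4 for the backward pass)."""
    return 6 * n_params * n_tokens


# GPT-3: 175 billion parameters, roughly 300 billion training tokens.
flops = training_flops(175e9, 300e9)

# Hypothetical hardware: one device sustaining 1e15 FLOP/s without pause.
SECONDS_PER_DAY = 86_400
days = flops / 1e15 / SECONDS_PER_DAY

print(f"{flops:.2e} FLOPs, about {days:,.0f} days on one such device")
```

Since the cost is proportional to both parameters and tokens, retraining from scratch every time new data arrives quickly becomes impractical, which is the point made above.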

GPT is entirely focused on generating text by calculating the probability of which word should come next. The choice of the next word depends on the preceding context, on repetition, and on many other variables.
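The sketch below illustrates the idea of choosing the next word by probability. It assumes the model has already produced a raw score for each candidate word; the candidates, the scores, and the simple repetition penalty are hypothetical (real GPT models score tokens from a vocabulary of tens of thousands, and their penalty schemes differ). A softmax turns scores into probabilities, and a temperature controls how adventurous the choice is.

```python
import math
import random


def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities that sum to 1.
    Lower temperature sharpens the distribution; higher flattens it."""
    scaled = [s / temperature for s in scores]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def apply_repetition_penalty(words, scores, history, penalty=1.0):
    """Simplified, hypothetical scheme: lower the score of any word
    that already appeared in the generated text."""
    return [s - penalty if w in history else s for w, s in zip(words, scores)]


def next_word(words, scores, temperature=1.0, rng=random):
    """Sample the next word in proportion to its probability."""
    probs = softmax(scores, temperature)
    return rng.choices(words, weights=probs, k=1)[0]


# Hypothetical candidates and scores after the prompt "The cat sat on the".
words = ["mat", "sofa", "roof", "moon"]
scores = [3.0, 2.0, 1.0, -1.0]

probs = softmax(scores)
for w, p in zip(words, probs):
    print(f"{w}: {p:.2f}")

penalized = apply_repetition_penalty(words, scores, history={"mat"})
print(next_word(words, penalized, temperature=0.8, rng=random.Random(0)))
```

Sampling instead of always taking the most likely word is what lets the same prompt produce different, but still plausible, continuations.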

GPT Limitations

- Pre-training: Once trained, the model can only generate information from the content it was trained on and cannot take new information into account. Incremental training is possible, but ChatGPT does not yet use it to learn from new conversations.

- Input data limit: GPT-3 accepts at most 2,048 tokens (whole words or word fragments) of input. This means a large amount of data cannot be entered at once, which may limit some applications.

- Bias: If the model is trained on data from only one source, it may be biased. This is a big problem that can lead to wrong conclusions, prejudice, or even taking sides on controversial topics. Reducing it requires additional training on data from different sources or on manually collected data.

- Inference time: Inference time is the time the model takes to generate a response. GPT-3 can take a long time to produce a result, which can be a problem for applications that need fast answers.
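A common way to live with the input data limit is to keep only the most recent part of a long text. The sketch below assumes a 2,048-token window and, because the generated answer also has to fit in the same window, reserves some room for it; the 256-token reserve is an arbitrary assumption. The whitespace tokenizer is a hypothetical stand-in: real GPT models use byte-pair encoding, where a token is often a fragment of a word.

```python
CONTEXT_WINDOW = 2048  # GPT-3's limit, in tokens


def naive_tokenize(text):
    """Hypothetical stand-in tokenizer: one token per whitespace-separated
    word. Real GPT tokenizers split text into subword pieces instead."""
    return text.split()


def fit_to_context(tokens, max_tokens=CONTEXT_WINDOW, reserve_for_output=256):
    """Keep only the most recent tokens so that the prompt plus the
    tokens reserved for the answer fit in the context window."""
    budget = max_tokens - reserve_for_output
    if budget <= 0:
        raise ValueError("reserve_for_output must be smaller than max_tokens")
    return tokens[-budget:]


long_text = "word " * 5000
tokens = naive_tokenize(long_text)
kept = fit_to_context(tokens)
print(len(tokens), "tokens in,", len(kept), "tokens kept")
```

Truncation is the simplest option, but everything that falls outside the window is simply invisible to the model, which is why this limit matters for applications that need to handle long documents.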

Of course, there are other limitations such as processing cost and how fast new data can be added.

Where can artificial intelligence go?

There are rumors that GPT-4 will be released in 2023 with a few trillion parameters, able to produce even more accurate and much longer texts and to accept inputs with more tokens.

We should also see progress in “multimodal” AI, that is, the ability to process (and generate) different kinds of data such as text, images, audio, and video. DALL-E, also from OpenAI, is a good example of a multimodal AI: it generates images from text.

The term GPT has become popular in recent weeks, but there are much more advanced concepts, such as AGI (Artificial General Intelligence), the ability of a system to process and learn from data much as a human being does. AGI is a much broader and more complex concept than GPT, and it may still take quite a few years before we see anything close to it.